ViewInterHR is the solution that best responds to current privacy law in Korea.

On 15 March, some provisions of the amended Personal Information Protection Act come into force, including new rights for data subjects regarding automated decisions, such as those made by artificial intelligence (AI). ViewInterHR is one of the fastest in the industry to respond to these changes.

Many organisations and individuals wonder how to respond to the recent changes to the law on automated decision-making. At ViewInterHR, we have been working closely and proactively with the Personal Information Protection Commission to prepare for these changes since before they were proposed.

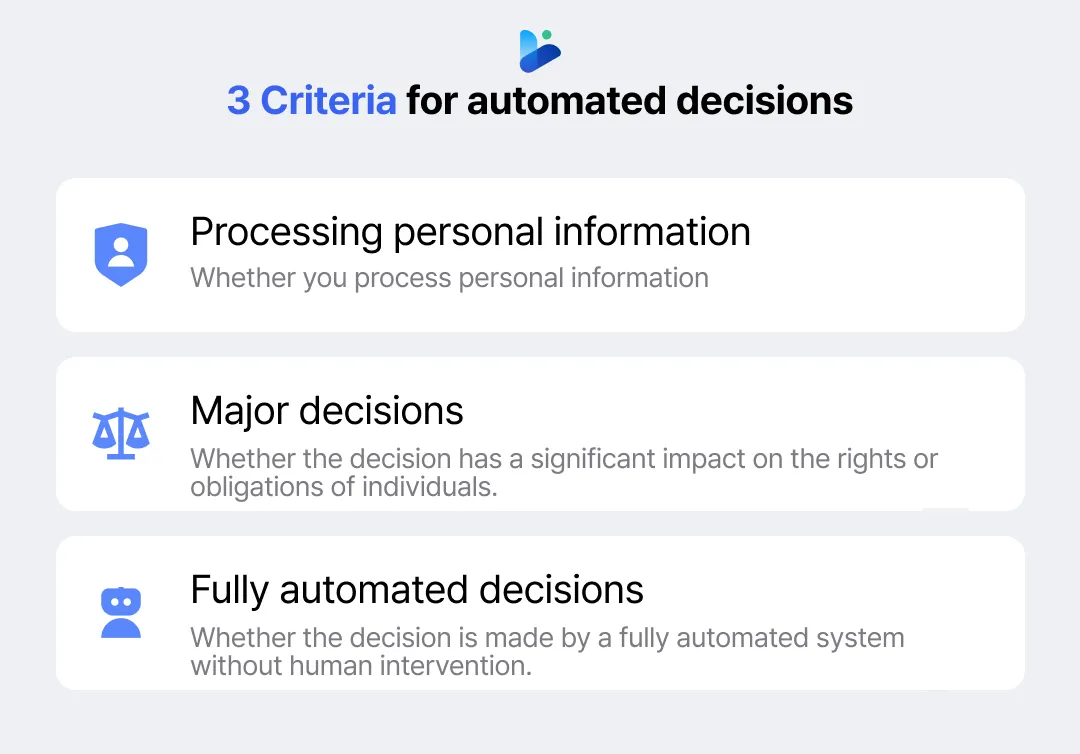

Today, we'd like to explore this topic further and provide a detailed preparation guide. Automated decision-making is ⓐ automatically processing information, including personal data, by a computer algorithm or system ⓑ without human intervention ⓒ to make a significant decision.

It's important to note that all three conditions must be met for a decision to be classified as automated. If all three conditions are met, the data subject has a legal right to object to the decision.
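The three-condition test above can be sketched in a few lines of Python. This is only an illustration of the logic, not a legal test: the `Decision` class, its field names, and the function name are all hypothetical, and whether each condition is actually met in a real case remains a legal judgement.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Decision:
    # Hypothetical field names, chosen only for this illustration.
    automated_processing: bool  # personal data is processed by an algorithm or system
    human_intervention: bool    # a person took part in reaching the outcome
    significant: bool           # the outcome is a significant decision


def is_automated_decision(d: Decision) -> bool:
    """Return True only when all three conditions hold at the same time."""
    return d.automated_processing and not d.human_intervention and d.significant


auto_only = Decision(automated_processing=True, human_intervention=False, significant=True)
reviewed = Decision(automated_processing=True, human_intervention=True, significant=True)

print(is_automated_decision(auto_only))  # True
print(is_automated_decision(reviewed))   # False
```

Changing any single field is enough to take a decision out of the "automated decision" category, which is why each condition has to be checked separately.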

So, let's take a closer look at what constitutes an automated decision.

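First, we need to understand the concept of automated decisions and their criteria in more detail. There are three main criteria: the decision involves the processing of personal data, it is made by a fully automated system without human intervention, and it is a significant decision.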

These three criteria can help you determine which situations constitute automated decisions. As the processing of personal data is typically integral to the recruitment process, most recruitment-related decisions meet the first criterion.

The purpose of using AI interviewing solutions is to assess candidates more accurately and objectively. By minimising human intervention and giving all candidates a fair chance, AI interviewing solutions make hiring decisions fairer.

As a result, by applying for a job where an AI interview solution is used, the applicant agrees to the recruitment process. The most contested part of automated decisions is the right to refuse the decision. In this case, however, the right to refuse the application of the automated decision is not recognised.

Therefore, the areas to focus on are 'significant decisions' and 'fully automated decisions'.

The concept of a 'significant decision' can be highly subjective, making it difficult to determine its exact scope. While the Personal Information Protection Act does not clearly define a 'significant decision', the GDPR provides some hints.

The GDPR, or General Data Protection Regulation, is the data protection legislation in Europe, and the amendments to Korea's Personal Information Protection Act borrow some of its wording.

Article 22 GDPR (Automated individual decision-making, including profiling) concerns automated decisions, and Recital 71 in its preamble concerns significant decisions.

The GDPR cites "automatic refusal of an online credit application" and "e-recruiting practices without any human intervention" as examples of significant decisions. This suggests that similar examples could be used under Korean privacy law to determine what constitutes a significant decision.

(71) The data subject should have the right not to be subject to a decision, which may include a measure, evaluating personal aspects relating to him or her which is based solely on automated processing and which produces legal effects concerning him or her or similarly significantly affects him or her, such as automatic refusal of an online credit application or e-recruiting practices without any human intervention.

Such processing includes ‘profiling’ that consists of any form of automated processing of personal data evaluating the personal aspects relating to a natural person, in particular, to analyse or predict aspects concerning the data subject’s performance at work, economic situation, health, personal preferences or interests, reliability or behaviour, location or movements, where it produces legal effects concerning him or her or similarly significantly affects him or her.

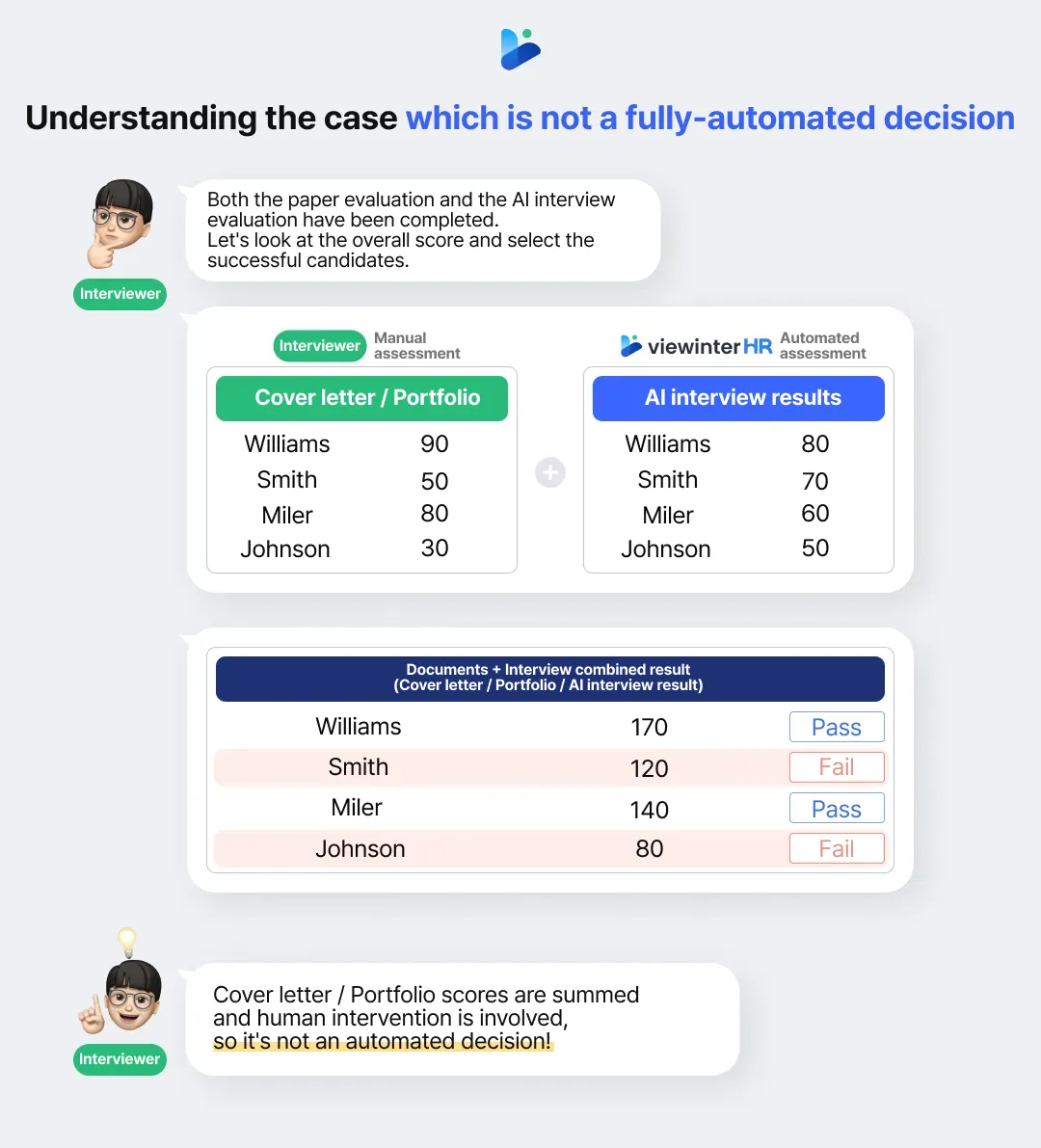

So, what is a "fully automated decision"? The definition is fairly straightforward: if the outcome reflects meaningful human intervention, it is not an automated decision. Human intervention is the critical factor, not the presence or absence of AI.

Here are some examples of when a hiring decision is not fully automated

On the other hand, when grading a test, if the program ⓐ checks the answers, ⓑ assigns a score, ⓒ determines a pass/fail based on that score, and ⓓ does not involve human intervention, this is automated processing.
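The grading example can be made concrete with a minimal Python sketch. Everything here is a hypothetical illustration: the `grade` function, its parameters, and the optional `reviewer` callback are assumptions introduced for this example, and the `fully_automated` flag only records whether a human review step ran, not whether that review would count as sufficient intervention under the law.

```python
from typing import Callable, Optional, Sequence


def grade(
    answers: Sequence[str],
    key: Sequence[str],
    pass_mark: int,
    reviewer: Optional[Callable[[int, bool], bool]] = None,
) -> dict:
    # Check the answers and assign a score (one point per match).
    score = sum(1 for given, expected in zip(answers, key) if given == expected)
    # Determine pass/fail from the score alone.
    passed = score >= pass_mark
    # If a human reviewer is supplied, that person makes the final call.
    if reviewer is not None:
        passed = reviewer(score, passed)
    return {"score": score, "passed": passed, "fully_automated": reviewer is None}


# No reviewer: the program alone decides.
print(grade(["a", "b", "c"], ["a", "b", "d"], pass_mark=2))
# → {'score': 2, 'passed': True, 'fully_automated': True}

# A (hypothetical) human reviewer overrides the program's outcome.
print(grade(["a", "b", "c"], ["a", "b", "d"], pass_mark=2,
            reviewer=lambda score, passed: False))
# → {'score': 2, 'passed': False, 'fully_automated': False}
```

The scoring logic is identical in both calls; the only difference is whether a person has the final say, which mirrors the point that human intervention, not the use of software, is what matters.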

The most important points in responding to automated decisions are ⓐ the right to refuse, ⓑ the duty of notice, and ⓒ the right to demand an explanation.

In the case of AI interviewing, once a candidate consents to the recruitment process, it becomes impossible to opt out. This means that, in accordance with Article 15(1)(a) of the Personal Information Protection Act, the data subject cannot exercise the right to refuse; refusing to consent to the recruitment process is effectively equivalent to not applying to the company.

ViewInterHR takes two steps to protect the rights of data subjects where automated decisions are concerned.

In accordance with Article 44-4 of the Enforcement Decree of the Personal Information Protection Act, ViewInterHR provides a sample prior notice for the following elements of automated decisions:

(Please note that the actual posting is the responsibility of the company as a personal information processor)

These notices, developed in consultation with the Personal Information Protection Commission, focus on helping you understand automated decisions and protecting your rights.

If the recruitment process uses an automated decision system, the candidate can request an explanation, and the company must respond in good faith (under Article 37-2 of the Personal Information Protection Act).

ViewInterHR is a leading provider of AI interviewing solutions and implements explainable AI: the basis for each result is explained in the feedback reports provided to candidates.

ViewInterHR was able to meet this standard without any additional preparation after the Personal Information Protection Act was amended, because we could already clearly explain the basis for AI interview results.

While other interviewing solutions struggle to explain the AI's judgement clearly, ViewInterHR adheres to the principle that "AI must be explainable".

We've written this article as a guide to the changes in the Personal Information Protection Act. In closing, we'd like to share two important takeaways.

① The revision of the Personal Information Protection Act means that the level and standard of AI technology can now be legally evaluated.

ViewInterHR is the most advanced solution for complying with the Personal Information Protection Act, as this article shows.

ViewInterHR holds a number of AI and HR patents that is unparalleled in the industry. ViewInterHR has registered and filed 32 domestic and international patents for AI interviewing, and GenesisLab has registered and filed 64 patents, making our AI technology unrivalled. This is our most significant comparative advantage over other companies in our industry.

As AI enters more and more areas of our daily lives and work, people will be more concerned and curious about AI than ever before. It is not always easy to accept something new in our lives. Still, it is self-evident that only businesses that use AI to grow efficiently, with less time, cost, and effort, will survive this difficult period and succeed.

② Validation of AI technology has become more critical than ever.

In any industry, it is still difficult for people who are not AI experts to tell which technologies are genuinely 'AI technologies'. Even in an era when information asymmetry is approaching zero, there are too many companies and solutions that pretend to be AI solutions without using any AI technology at all.

As the first company in Korea to lead AI technology in some areas, ViewInterHR is not only agile in its legal responses but also pursues ethical values and works with a mission to secure AI credibility that anyone can trust.

ViewInterHR does not call its solutions AI without evidence and reliable, objective indicators. We are the only HR company in Korea to be certified by the TTA (Korea Telecommunications Technology Association) for AI reliability assessment, which means a third-party professional organisation has verified our actual AI technology.

We are also the only HR company to have won the Ministry of Science and ICT's AI-related Ministerial Award, and we have won it twice (2023 Korea AI Grand Prize; 1st Ministerial Award for AI Reliability and Quality).

GenesisLab, the creator of ViewInterHR, predicted that everyone would be judged on the superiority of their AI technology and on their ethical responsibilities. ViewInterHR already has AI ethics guidelines, created with the Korea Association of Artificial Intelligence Ethics to meet the ethical standards expected of AI, and has consulted the Personal Information Protection Commission numerous times to comply with the Personal Information Protection Act.

We hope that everyone reading this article will no longer fall victim to 'solutions that pretend to be AI', which do not use AI technology or have not been verified at all, especially now that the amended Personal Information Protection Act exposes companies that get this wrong to legal sanctions.

When adopting an AI solution, it is essential to determine whether the AI is technologically capable and legally protected. If you have any questions or concerns, please do not hesitate to contact us. We will provide you with all the help you need.

Written by 이도연 (HR Business Development)

Reviewed and edited by 최성원 (Marketing)